I was very excited at the thought of attending the first edition of the Snowflake customer event in San Francisco last week, and the event did deliver…

Meet the Data Engineers

The event was well attended for a first edition, with 1,800 participants, and it sold out a couple of weeks in advance, according to the organizers. For me, it was an opportunity to meet Data Engineers and hear about their use cases and real-life issues. A lot of it has to do with handling volume and performance, as for a DB Administrator, but I feel the required knowledge is far more extensive… It seems they constantly have to worry about cost, as cloud platform bills can run out of control. They also have to understand the business purpose, just like an analyst, to make the right decisions when trade-offs arise across performance, cost, data quality, security and more. Their tool sets also evolve so fast that they had better be good learners, attending events such as Snowflake Summit or continuing their education through something like a data science bootcamp to keep up with their field.

Some of the use cases were surprising to me. For instance, a European TV station assembled a whole team of data scientists to measure, through machine learning, the emotions conveyed by the images they broadcast and, along the way, the impact of commercials. I had no idea this was already implemented outside of the labs…

A topic that came up again and again, across organizations, is the rift between data analysts and data scientists. Data Analysts use Excel, SQL and, most often, Tableau, whereas Data Scientists live in Notebooks with Python on Spark and don’t take SQL seriously. Some smart vendors like Snowflake or DataRobot are working to bridge that gap by bringing together the best of both worlds, but there still seems to be a lot of misunderstanding. Of course I am biased since I am on the Analyst side, but if I were a Data Scientist, I would at least keep up with the SQL side, because I believe it will prevail eventually: Snowflake already matches the performance of Spark; it is just missing libraries…

In the meantime, the task of holding it all together and translating for each side falls in the lap of the data engineers, and that’s a lot to ask of them!

Meet the Founders

I got to participate in a brainstorming session organized by Snowflake Product Management, which was well attended. Besides some of the key engineers behind innovations such as Streams and Tasks, co-founder and architect Thierry Cruanes was in the room. It was great to hear his unfiltered take. There was also a keynote by another co-founder, Benoit Dageville, who shared the history and some elements of the road map. It was elevated, instructive and inspiring…

Meet the Ecosystem

It’s a common sight at customer conferences, but it was nevertheless very convenient to find most vendors associated with the Snowflake platform under one roof. The exhibition hall, open for three days, was astutely named the Base Camp. It was great to be able to kick the tires of DataRobot, Fivetran, Stitch, Databricks, Matillion, Sigma Computing, SnapLogic, Segment, Looker (pre-Google), Tableau (pre-SFDC) and more… Notably missing in action: Alteryx, Periscope (post-Sisense) and Alooma (post-Google). Those acquisitions are getting quite disruptive in this industry… And I am not even mentioning the rumors floating around…

Meet the New Features

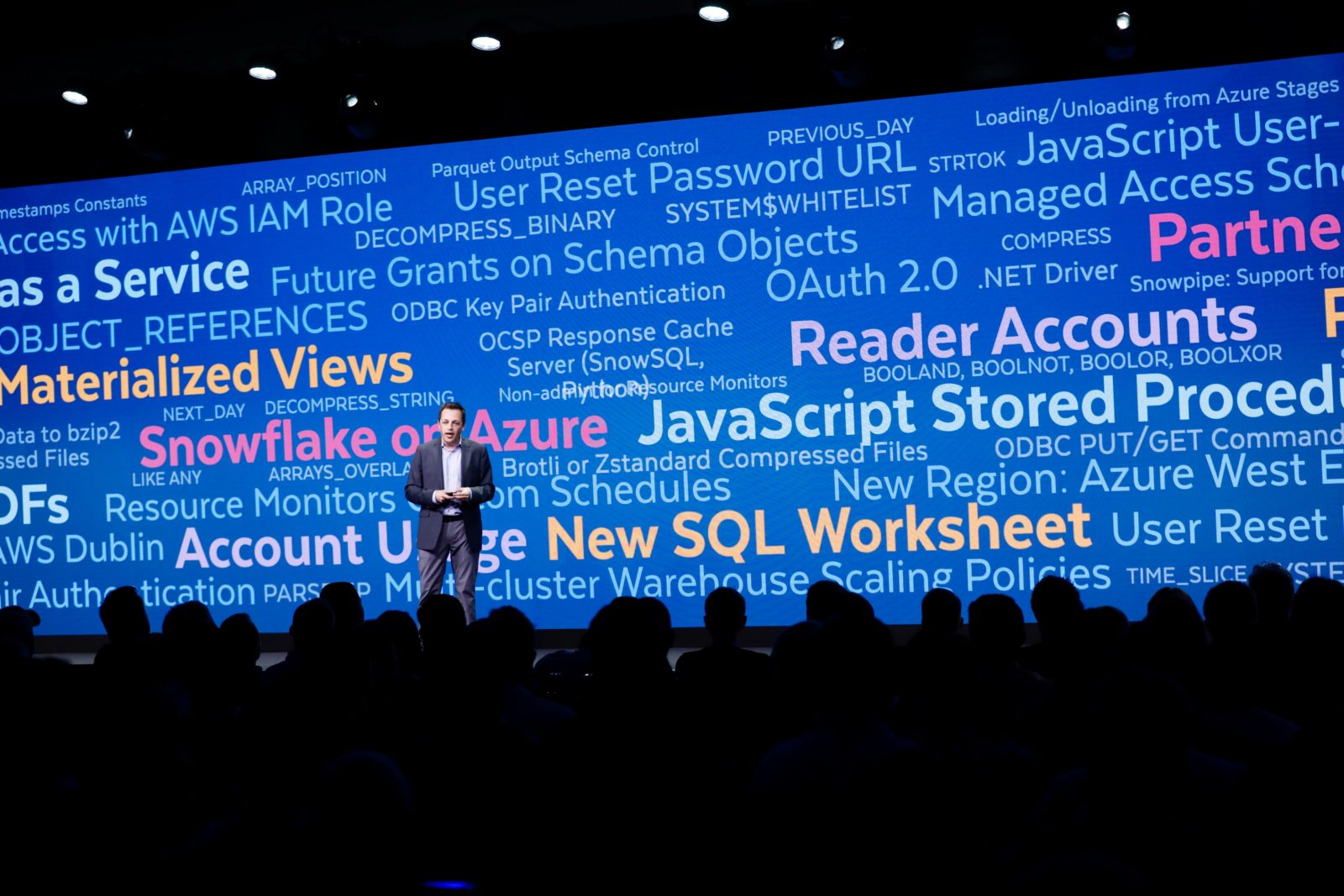

Besides sessions offering technical deep dives, such as Clustering & Pruning or using the Query Optimizer, there were some really exciting announcements, ranked here in my order of preference:

- Streams & Tasks: Snowflake completes the continuous pipeline feature started with Snowpipe by giving us built-in orchestration capabilities! Hear that, Tableau? Streams are objects that track the changes (the delta) on a staging table, so that only new or modified rows flow to downstream target tables, and Tasks, another new object type, run that processing logic on a set schedule. Even used separately, those new capabilities really extend the value of the platform and reduce the dependency on ETL tools. Combined with a service like Stitch or Fivetran, which syncs the data to a staging table on autopilot, one can easily use Streams & Tasks to update data-marts and run other types of transformation jobs on schedule. ETL vendors will have to find new ways to provide value…

- Data Exchange: an “App Store” for Snowflake that brings in external data sets securely (hear that, Tableau?). It is easy to set up and, as a bonus, storage is free, since the cost is carried by the provider sharing its data set…

- Availability on Google Cloud: coming by the end of the year, users will be able to choose to host their Snowflake on AWS, Azure, and now Google Cloud. Besides expanding market reach, this will allow users to arbitrage cloud processing costs and switch from one provider to another quite seamlessly, at least that’s the vision…

- Redesigned worksheet: following the acquisition of Numeracy, Snowflake will turbocharge the capabilities of its in-browser worksheet, with the long-awaited SQL autocomplete, enhanced schema browsing, folders, automatic data profiles, and even viz capabilities! Take that, Tableau! The demo was very convincing; it is coming before the end of the year and should boost user productivity tremendously. It would easily be my #1 if it were already available… A small demo is visible in the keynote video, but we saw in the dedicated session that there is way more to it than that…
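To make the delta mechanism behind Streams & Tasks a bit more concrete, here is a minimal Python sketch of the idea. It is a conceptual model only, not how Snowflake implements it: in Snowflake itself, streams and tasks are created with SQL (`CREATE STREAM`, `CREATE TASK`). The names `StagingTable`, `Stream` and `merge_task` are hypothetical, chosen for illustration. The assumption here is that the stream is just an offset into an append-only staging table, and that the "task" is a function a scheduler would call periodically to upsert only the new rows into a data-mart keyed by `id`.

```python
from dataclasses import dataclass, field


@dataclass
class StagingTable:
    """Hypothetical append-only staging table, e.g. fed by a sync service."""
    rows: list = field(default_factory=list)

    def insert(self, row: dict) -> None:
        self.rows.append(row)


@dataclass
class Stream:
    """Hypothetical stream: remembers how far downstream processing has read,
    so each consumer only ever sees the rows added since the last run."""
    source: StagingTable
    offset: int = 0

    def has_data(self) -> bool:
        return self.offset < len(self.source.rows)

    def consume(self) -> list:
        delta = self.source.rows[self.offset:]
        self.offset = len(self.source.rows)  # advance only once the delta is read
        return delta


def merge_task(stream: Stream, target: dict) -> int:
    """Hypothetical scheduled task body: upsert the delta into a target keyed by id.
    Returns the number of rows processed in this run."""
    if not stream.has_data():
        return 0  # nothing new since the last run, so skip the work
    delta = stream.consume()
    for row in delta:
        target[row["id"]] = row  # later versions of a row overwrite earlier ones
    return len(delta)


# Usage: in practice a scheduler would trigger merge_task; here we call it by hand.
staging = StagingTable()
orders_stream = Stream(staging)
data_mart = {}

staging.insert({"id": 1, "amount": 10})
staging.insert({"id": 2, "amount": 5})
print(merge_task(orders_stream, data_mart))  # 2

staging.insert({"id": 1, "amount": 12})      # an update to an existing order
print(merge_task(orders_stream, data_mart))  # 1
print(merge_task(orders_stream, data_mart))  # 0
print(data_mart[1]["amount"])                # 12
```

The point of the offset is that each scheduled run touches only the delta rather than rescanning the whole staging table, which is what lets a simple schedule replace much of a classic ETL job.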

Parting Thoughts

I was already a fan of Snowflake as the best data warehouse solution, and this event confirmed my conviction. It gives me unmatched agility at the best value in the analytics deployments I perform, even though I use only 20% of its features. The road map is promising; I really like how they are thinking…